100 Days of YARA - 2023

Note

This is a consolidated post of all 100 days of posts from 2023. These fall roughly into the following sections:

- Days 1-4 - Introduction to YARA modules

- Days 5-30 - Highlighting the YARA LNK module

- Days 31-34 - Hex values in text string tips

- Days 35-37 - YARA atoms

- Days 38-45 - YARA math module

- Days 46-57 - YARA command line flags

- Days 58-75 - File classification in YARA

- Days 76-77 - YARA rule writing philosophy

- Days 78-99 - Greg’s challenge: AcidBox framework rules

- Day 100 - Wrapup, and index of coincidence

Day 1 - 100 Days of YARA

I’m a big fan of YARA. It’s a tool that makes it possible for anyone interested to write static signatures, whether to classify specific strains of malware, perform broader threat hunting, or even parsing files.

In 2022, Greg Lesnewich started #100DaysofYARA; an initiative, similar to #100DaysOfCode, to engage with YARA for the first 100 days of the year. This can either be writing YARA rules, contributing to the source code, creating tools to help automate YARA, or generally learning more about the tool/helping teach others.

Last year, I didn’t contribute to the full 100 days, but was inspired by the event to create a module in YARA to parse LNK files: https://github.com/VirusTotal/yara/pull/1732

This year, I’d like to highlight this module and what it’s capable of, as well as trying to contribute on as many of the days that I can!

To start with, I’d always recommend starting by looking through the YARA documentation to see how to write rules. This is a document I spend a lot of time in: https://yara.readthedocs.io/en/stable/writingrules.html

I’d also recommend checking out Florian Roth’s guide for YARA performance, which can give some good insight into why rules like the following are not great:

import "math"

rule CPU_Eater {

meta:

description = "Please don't actually use this rule, it's realllllly bad"

condition:

for all j in (0 .. filesize) : (

for all i in (0 .. j) : (

math.entropy(i, j) > 0

)

)

}

And with that terrible rule out of the way, we can start moving on to more useful content from today onwards!

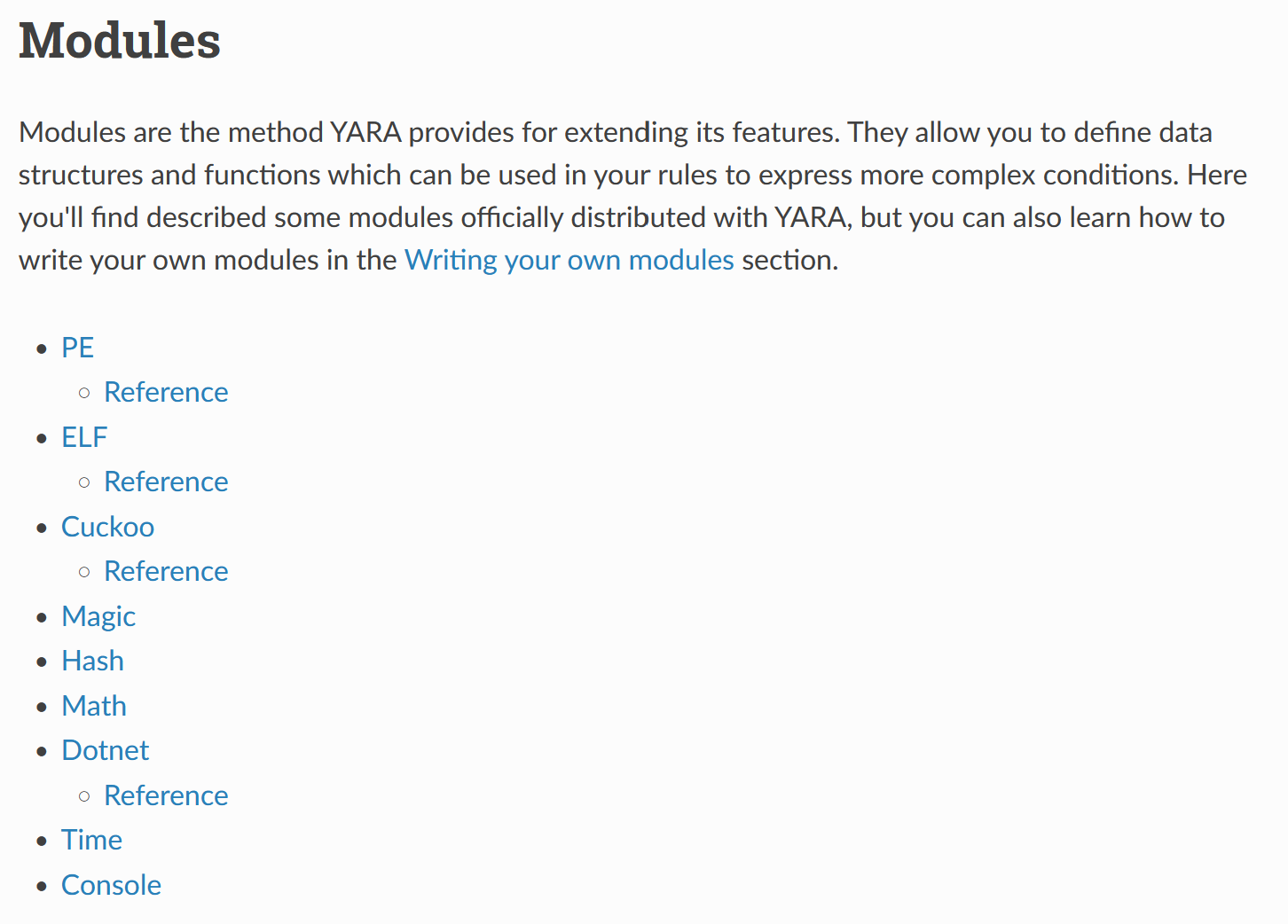

Day 2 - YARA Modules

YARA is primarily designed to be used for efficient string matching (which it does very well). But one of its most powerful features (in my opinion) is the ability to create and use modules. These can be used for file parsing (such as PE, ELF or .NET binaries), utility functions to aid in writing rules (such as hashing algorithms, or maths functions), to help debug your rules (e.g. via the console module), or anything you can think of that can be written in C using YARA’s API!

Personally, I find myself using the PE module the most, which also happens to be the most built out default YARA module. I highly recommend reading through its documentation to see what it can do, and if there’s something missing then raise an issue on GitHub! There are many active contributors to YARA who will be willing to try and implement it.

I’ll give some example rules using the PE module over the next couple of days to give some inspiration for how they can be used!

Day 3 - YARA Module Example 1 - Imphash and Rich Header Hash

A simple, yet effective way of using the PE module to cluster samples is via hash values of specific components of the PE: namely, the import hash (or imphash) and the rich header hash.

Both of these hash values can prove to be quite unique, and make it possible write YARA based off them. For example, if I take the SHA-256 hash a37a290863fe29b9812e819e4c5b047c44e7a7d7c40e33da6f5662e1957862ab from a report by Mandiant on APT42, we can write the following the rule which can be used to cluster further samples:

import "pe"

import "hash"

rule APT42_CHAIRSMACK_PE_Metadata {

meta:

description = "Detects samples of CHAIRSMACK based on unique PE metadata (i.e. imphash and rich PE header hash)"

reference = "https://mandiant.com/resources/blog/apt42-charms-cons-compromises"

hash = "a37a290863fe29b9812e819e4c5b047c44e7a7d7c40e33da6f5662e1957862ab"

condition:

pe.imphash() == "72f60d7f4ce22db4506547ad555ea0b1" or

hash.md5(pe.rich_signature.clear_data) == "c0de41e45352714500771d43f0d8c4c3"

}

I’ve written a script that can generate rules from these values (making use of pefile which makes this parsing very straightforward!), and you can also get these values from other platforms that parse files, such as VirusTotal, AlienVault, MalwareBazaar, and so on!

So next time you are about to write some rules for a PE binary, use the imphash and rich header hash for some quick and easy rules! These shouldn’t be intended to replace more rigorous rules (i.e. based on strings, code segments, anomalies, etc.), but can build in some redundancy into your detection capabilities.

Disclaimer

As always with YARA rules, test to make sure your rules behave as expected! Blindly using an imphash or rich header hash might get you lots of false positives. E.g., the imphash for a .NET PE binary (f34d5f2d4577ed6d9ceec516c1f5a744) will be the same across many different files, due to them all importing the same library (mscoree.dll) and the same function from that library (_CorExeMain).

Day 4 - YARA Module Example 2 - PDB Paths

PDB files are generated by Visual Studio for the purposes of debugging. As such, binaries generated using the Debug option in Visual Studio will have a hardcoded path to where the PDB file lies. This path can reveal some useful information, such as the drive/username of the user that compiled the file, or internal names given to the project.

All kinds of heuristics can be used on PDB paths (see Steve Miller’s Swear Engine), so it’s worth trying to see what weird things you can find!

import "pe"

rule Heuristic_PE_PDB_Self_Identifying_as_Malware {

meta:

description = "Detects files that identify themselves as malware"

condition:

pe.pdb_path icontains "malware"

}

Note: icontains is an operator that acts as a case-insensitive contains. If you’ve not seen this operator, I highly recommend checking out all the operators listed in the YARA documentation; there are some really useful ones!

For a more practical example, we could consider the SessionManager IIS backdoor as reported on by Kaspersky. In the IoCs section of this report, they list the following PDB paths:

C:\Users\GodLike\Desktop\t\t4\StripHeaders-master\x64\Release\sessionmanagermodule.pdbC:\Users\GodLike\Desktop\t\t4\SessionManagerModule\x64\Release\sessionmanagermodule.pdbC:\Users\GodLike\Desktop\t\t4\SessionManagerV2Module\x64\Release\sessionmanagermodule.pdbC:\Users\GodLike\Desktop\t\t4\SessionManagerV3Module\x64\Release\sessionmanagermodule.pdbC:\Users\GodLike\Desktop\t\t0\Hook-PasswordChangeNotify-master\HookPasswordChange\x64\Release\HookPasswordChange.pdb

As you can see, there are some strings across these PDBs, such as \GodLike\, \t\t[0-9]\, and sessionmanagermodule.pdb. It can sometimes take some trial and error to figure out which segments of a PDB path will be good to signature, but a first pass at writing a rule making use of the PE Module could be as follows:

import "pe"

rule SessionManager_IIS_Backdoor_PDB_Path_Segments {

meta:

description = "Detects the SessionManager IIS backdoor based on some unique PDB path segments"

reference = "https://securelist.com/the-sessionmanager-iis-backdoor/106868/"

condition:

pe.pdb_path contains "\\GodLike\\" or

pe.pdb_path matches /\\t\\t[0-9]\\/ or

pe.pdb_path endswith "\\sessionmanagermodule.pdb"

}

Of course you could write a rule that looks for these strings in general within a sample without using the PE module, but the regex \t\t[0-9]\ might not perform as efficiently on larger samples as opposed to just evaluating on the much smaller PDB path variable.

Day 5 - Introducing the YARA LNK module

The Windows Shell Link file format (or LNK) has been used by threat actors for years for malicious purposes (reference: https://attack.mitre.org/techniques/T1204/001/)! Whether to download a next-stage payload, or set persistence on an infected system, the LNK file format can be quite versatile. It has also seen an uptick in use as part of initial infection chains due to Microsoft disabling macros by default from documents downloaded from the internet.

With all this combined, I wanted to put together a YARA module for LNK files to aid defenders in being able to triage, parse, and detect them.

The next few weeks of posts will go into detail of how to use the module, and some rules that are possible to write using it. Along the way, we’ll hopefully learn some cool features of the LNK file format that will be useful to consider in general (check out Greg Lesnewich’s #100DaysofYARA contributions so far to see some cool LNK rules).

Shoutouts

I wouldn’t have been able to write this module without being able to see the source code of other YARA modules, and through the variety of currently available LNK parsers to help validate my output (such as exiftool or Silas Cutler’s LnkParse Python module).

Also shoutouts to Ollie Whitehouse who gave me some great tips to avoid bugs in my C code (which was very much needed!), and Wesley Shields for an early tip to make sure I didn’t do silly things dereferencing pointers in C.

And of course, shoutout to Victor Alvarez and all the YARA maintainers for creating and developing this awesome tool!

Acknowledgments

While I think the LNK module will give a great deal of flexibility to writing YARA rules for LNK files, a great deal of work has already done by others to write rules for LNKs! Please go check out rules from the following authors:

- Bart (@bartblaze): https://github.com/bartblaze/Yara-rules/blob/master/rules/generic/LNK_Ruleset.yar

- Florian Roth (@cyb3rops): https://github.com/Neo23x0/signature-base/blob/05ef26965be930fade49e5dcba73b9fefc04757e/yara/gen_susp_lnk_files.yar

If you know of any other open source LNK YARA rulesets, please give me a shout and I can update this page with them! Check out the repo set up for #100DaysofYARA to see some further LNK rules available as well: https://github.com/100DaysofYARA/2023

Day 6 - Installing YARA with the LNK module

The LNK is currently not included by default with YARA; at the time of writing, it is still awaiting approval to be merged in via a pull request on GitHub.

As such, if you want to test out the LNK module, you’ll need to:

- Clone the LNK module branch from here: https://github.com/BitsOfBinary/yara/tree/lnk-module

- Follow the instructions in the docs to install YARA: https://yara.readthedocs.io/en/stable/gettingstarted.html

The rough set of commands you’ll need to run if installing on Linux are as follows:

sudo apt-get install automake libtool make gcc pkg-config flex bison

./bootstrap.sh

./configure

make

sudo make install

You can optionally run make check to see if all the tests pass for YARA as well, although if you’re able to run yara --help at this stage as see output then you’ll know it has compiled and installed correctly!

The LNK module is available for any operating system, so you can compile it as normal for Linux/macOS, or use the Visual Studio projects to build it for Windows. @r0ny_123 also pointed out to me that you can grab Windows binaries from the AppVeyor builds (i.e. part of the regular CI/CD applied to YARA), e.g.: https://ci.appveyor.com/project/plusvic/yara/build/job/wthlb30bklmlns0a/artifacts

You can test if it the LNK module itself is working properly by trying to run the following rule and making sure there are no errors:

import "lnk"

rule test {

condition:

filesize > 0

}

The branch that the LNK module is on will install it by default, so you don’t need to add any flags to configure when compiling YARA.

I’m aiming to keep the LNK module branch up to date with the main branch of YARA, so all other features of YARA will be available if you compile the LNK module branch!

Troubleshooting

Let me know if you have any issues installing the module. Personally I’ve found that when compiling via WSL on Windows that the ./bootstrap.sh command doesn’t work as expected, but if I manually run the command inside the script file, that is autoreconf --force --install, then it works as expected!

Any feedback on the module?

If you have any feedback on the module (whether suggestions for how it could be used, support for it being merged in, etc.) please feel free to drop a comment on the open pull request on GitHub!

I hope that the module will be merged in by default into YARA one day (or at least, optionally available when compiling YARA from source).

Day 7 - Parsing an LNK file with the LNK module

The nice thing about the YARA file parsing modules is that you can output the populated variables by adding the -D flag on the command line. If you would like to test this out, and don’t have LNKs to hand, then sample LNK files can be found in the tests\data directory of the LNK module branch (all prefixed with lnk-). So if you save the following rule to test_lnk.yar:

import "lnk"

rule test {

condition:

filesize > 0

}

And run the command yara -D test_lnk.yar tests/data/lnk-standard (where lnk-standard is provided in the LNK documentation), you should see the following output:

lnk

is_malformed = 0

overlay_offset = YR_UNDEFINED

has_overlay = 0

vista_and_above_id_list_data

item_id_list

number_of_item_ids = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_vista_and_above_id_list_data = 0

tracker_data

droid_birth_file_identifier = "\xecF\xcd{"\x7f\xdd\x11\x94\x99\x00\x13r\x16\x87J"

droid_birth_volume_identifier = "@x\xc7\x94G\xfa\xc7F\xb3V\-\xc6\xb6\xd1\x15"

droid_file_identifier = "\xecF\xcd{"\x7f\xdd\x11\x94\x99\x00\x13r\x16\x87J"

droid_volume_identifier = "@x\xc7\x94G\xfa\xc7F\xb3V\-\xc6\xb6\xd1\x15"

machine_id = "chris-xps"

block_signature = 2684354563

block_size = 96

has_tracker_data = 1

special_folder_data

offset = YR_UNDEFINED

special_folder_id = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_special_folder_data = 0

shim_data

layer_name = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_shim_data = 0

property_store_data

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_property_store_data = 0

known_folder_data

known_folder_id

offset = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_known_folder_data = 0

icon_environment_data

target_unicode = YR_UNDEFINED

target_ansi = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_icon_environment_data = 0

environment_variable_data

target_unicode = YR_UNDEFINED

target_ansi = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_environment_variable_data = 0

darwin_data

darwin_data_unicode = YR_UNDEFINED

darwin_data_ansi = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_darwin_data = 0

console_fe_data

code_page = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_console_fe_data = 0

console_data

color_table

history_no_dup = YR_UNDEFINED

number_of_history_buffers = YR_UNDEFINED

history_buffer_size = YR_UNDEFINED

auto_position = YR_UNDEFINED

insert_mode = YR_UNDEFINED

quick_edit = YR_UNDEFINED

full_screen = YR_UNDEFINED

cursor_size = YR_UNDEFINED

face_name = YR_UNDEFINED

font_weight = YR_UNDEFINED

font_family = YR_UNDEFINED

font_size = YR_UNDEFINED

window_origin_y = YR_UNDEFINED

window_origin_x = YR_UNDEFINED

window_size_y = YR_UNDEFINED

window_size_x = YR_UNDEFINED

screen_buffer_size_y = YR_UNDEFINED

screen_buffer_size_x = YR_UNDEFINED

popup_fill_attributes = YR_UNDEFINED

fill_attributes = YR_UNDEFINED

block_signature = YR_UNDEFINED

block_size = YR_UNDEFINED

has_console_data = 0

icon_location = YR_UNDEFINED

command_line_arguments = YR_UNDEFINED

working_dir = "C\x00:\x00\\x00t\x00e\x00s\x00t\x00"

relative_path = ".\x00\\x00a\x00.\x00t\x00x\x00t\x00"

name_string = YR_UNDEFINED

link_info

common_path_suffix_unicode = YR_UNDEFINED

local_base_path_unicode = YR_UNDEFINED

common_path_suffix = "\x00"

common_network_relative_link

device_name_unicode = YR_UNDEFINED

net_name_unicode = YR_UNDEFINED

device_name = YR_UNDEFINED

net_name = YR_UNDEFINED

device_name_offset_unicode = YR_UNDEFINED

net_name_offset_unicode = YR_UNDEFINED

network_provider_type = YR_UNDEFINED

device_name_offset = YR_UNDEFINED

net_name_offset = YR_UNDEFINED

flags = YR_UNDEFINED

size = YR_UNDEFINED

has_common_network_relative_link = YR_UNDEFINED

local_base_path = "C:\test\a.txt"

volume_id

data = "\x00"

volume_label_offset_unicode = YR_UNDEFINED

volume_label_offset = 16

drive_serial_number = 813337217

drive_type = 3

size = 17

has_volume_id = 1

common_path_suffix_offset_unicode = YR_UNDEFINED

local_base_path_offset_unicode = YR_UNDEFINED

common_path_suffix_offset = 59

common_network_relative_link_offset = 0

local_base_path_offset = 45

volume_id_offset = 28

flags = 1

header_size = 28

size = 60

link_target_id_list

item_id_list_size = 189

number_of_item_ids = 4

item_id_list

[0]

size = 18

data = "\x1fP\xe0O\xd0 \xea:i\x10\xa2\xd8\x08\x00+00\x9d"

[1]

size = 23

data = "/C:\\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00"

[2]

size = 68

data = "1\x00\x00\x00\x00\x00,9i\xa3\x10\x00test\x00\x002\x00\x07\x00\x04\x00\xef\xbe,9e\xa3,9i\xa3&\x00\x00\x00\x03\x1e\x00\x00\x00\x00\xf5\x1e\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00t\x00e\x00s\x00t\x00\x00\x00\x14\x00"

[3]

size = 70

data = "2\x00\x00\x00\x00\x00,9i\xa3 \x00a.txt\x004\x00\x07\x00\x04\x00\xef\xbe,9i\xa3,9i\xa3&\x00\x00\x00-n\x00\x00\x00\x00\x96\x01\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00a\x00.\x00t\x00x\x00t\x00\x00\x00\x14\x00"

has_hotkey = 0

hotkey_modifier_flags = 0

hotkey = YR_UNDEFINED

hotkey_flags = 0

show_command = 1

icon_index = 0

file_attributes_flags = 32

link_flags = 524443

file_size = 0

write_time = 1221251237

access_time = 1221251237

creation_time = 1221251237

is_lnk = 1

TMPF_DEVICE = 8

TMPF_TRUETYPE = 4

TMPF_VECTOR = 2

TMPF_FIXED_PITCH = 1

TMPF_NONE = 0

FF_DECORATIVE = 80

FF_SCRIPT = 64

FF_MODERN = 48

FF_SWISS = 32

FF_ROMAN = 16

FF_DONTCARE = 0

BACKGROUND_INTENSITY = 128

BACKGROUND_RED = 64

BACKGROUND_GREEN = 32

BACKGROUND_BLUE = 16

FOREGROUND_INTENSITY = 8

FOREGROUND_RED = 4

FOREGROUND_GREEN = 2

FOREGROUND_BLUE = 1

WNNC_NET_GOOGLE = 4390912

WNNC_NET_MS_NFS = 4325376

WNNC_NET_MFILES = 4259840

WNNC_NET_RSFX = 4194304

WNNC_NET_VMWARE = 4128768

WNNC_NET_DRIVEONWEB = 4063232

WNNC_NET_ZENWORKS = 3997696

WNNC_NET_KWNP = 3932160

WNNC_NET_DFS = 3866624

WNNC_NET_AVID1 = 3801088

WNNC_NET_OPENAFS = 3735552

WNNC_NET_QUINCY = 3670016

WNNC_NET_SRT = 3604480

WNNC_NET_TERMSRV = 3538944

WNNC_NET_LOCK = 3473408

WNNC_NET_IBMAL = 3407872

WNNC_NET_SHIVA = 3342336

WNNC_NET_HOB_NFS = 3276800

WNNC_NET_MASFAX = 3211264

WNNC_NET_OBJECT_DIRE = 3145728

WNNC_NET_KNOWARE = 3080192

WNNC_NET_DAV = 3014656

WNNC_NET_EXIFS = 2949120

WNNC_NET_YAHOO = 2883584

WNNC_NET_FOXBAT = 2818048

WNNC_NET_STAC = 2752512

WNNC_NET_EXTENDNET = 2686976

WNNC_NET_3IN1 = 2555904

WNNC_NET_CSC = 2490368

WNNC_NET_RDR2SAMPLE = 2424832

WNNC_NET_TWINS = 2359296

WNNC_NET_DISTINCT = 2293760

WNNC_NET_FJ_REDIR = 2228224

WNNC_NET_PROTSTOR = 2162688

WNNC_NET_DECORB = 2097152

WNNC_NET_RIVERFRONT2 = 2031616

WNNC_NET_RIVERFRONT1 = 1966080

WNNC_NET_SERNET = 1900544

WNNC_NET_MANGOSOFT = 1835008

WNNC_NET_DOCUSPACE = 1769472

WNNC_NET_AVID = 1703936

VALID_NET_TYPE = 2

VALID_DEVICE = 1

DRIVE_RAMDISK = 6

DRIVE_CDROM = 5

DRIVE_REMOTE = 4

DRIVE_FIXED = 3

DRIVE_REMOVABLE = 2

DRIVE_NO_ROOT_DIR = 1

DRIVE_UNKNOWN = 0

COMMON_NETWORK_RELATIVE_LINK_AND_PATH_SUFFIX = 2

VOLUME_ID_AND_LOCAL_BASE_PATH = 1

HOTKEYF_ALT = 4

HOTKEYF_CONTROL = 2

HOTKEYF_SHIFT = 1

SW_SHOWMINNOACTIVE = 7

SW_SHOWMAXIMIZED = 3

SW_SHOWNORMAL = 1

FILE_ATTRIBUTE_ENCRYPTED = 16384

FILE_ATTRIBUTE_NOT_CONTENT_INDEXED = 8192

FILE_ATTRIBUTE_OFFLINE = 4096

FILE_ATTRIBUTE_COMPRESSED = 2048

FILE_ATTRIBUTE_REPARSE_POINT = 1024

FILE_ATTRIBUTE_SPARSE_FILE = 512

FILE_ATTRIBUTE_TEMPORARY = 256

FILE_ATTRIBUTE_NORMAL = 128

RESERVED_2 = 64

FILE_ATTRIBUTE_ARCHIVE = 32

FILE_ATTRIBUTE_DIRECTORY = 16

RESERVED_1 = 8

FILE_ATTRIBUTE_SYSTEM = 4

FILE_ATTRIBUTE_HIDDEN = 2

FILE_ATTRIBUTE_READONLY = 1

KEEP_LOCAL_ID_LIST_FOR_UNC_TARGET = 67108864

PREFER_ENVIRONMENT_PATH = 33554432

UNALIAS_ON_SAVE = 16777216

ALLOW_LINK_TO_LINK = 8388608

DISABLE_KNOWN_FOLDER_ALIAS = 4194304

DISABLE_KNOWN_FOLDER_TRACKING = 2097152

DISABLE_LINK_PATH_TRACKING = 1048576

ENABLE_TARGET_METADATA = 524288

FORCE_NO_LINK_TRACK = 262144

RUN_WITH_SHIM_LAYER = 131072

UNUSED_2 = 65536

NO_PIDL_ALIAS = 32768

HAS_EXP_ICON = 16384

RUN_AS_USER = 8192

HAS_DARWIN_ID = 4096

UNUSED_1 = 2048

RUN_IN_SEPARATE_PROCESS = 1024

HAS_EXP_STRING = 512

FORCE_NO_LINK_INFO = 256

IS_UNICODE = 128

HAS_ICON_LOCATION = 64

HAS_ARGUMENTS = 32

HAS_WORKING_DIR = 16

HAS_RELATIVE_PATH = 8

HAS_NAME = 4

HAS_LINK_INFO = 2

HAS_LINK_TARGET_ID_LIST = 1

test tests/data/lnk-standard

The variables printed are the ones that are set by the LNK module, in reverse order of when they have been parsed. They are a combination of fixed values (i.e. the symbolic constants defined in the LNK docs), parsed values from the LNK (either as single variables, or arrays/dictionaries), and some boolean values set about general information of the LNK.

The layer of indentation corresponds to how you can access the variable. If you want to access the file_size variable, you would do so via lnk.file_size. If you want to access number_of_item_ids, you can via lnk.link_target_id_list.number_of_item_ids.

As you can see, not all variables are set. The LNK file specification points out that many sections of the file format are optional, so don’t expect the majority of variables to be set on each LNK file you parse!

If you want detail on each of the variables parsed out/available, they are documented in docs\modules\lnk.rst (which you’ll have to build yourself or just read the .rst file in a text editor). I’ve also manually converted these docs to markdown for these blog posts (although they won’t remain up to date with any changes) which you can find here: https://bitsofbinary.github.io/yara/2023/01/05/lnk_module_documentation.html

Day 8 - Testing if a file is an LNK

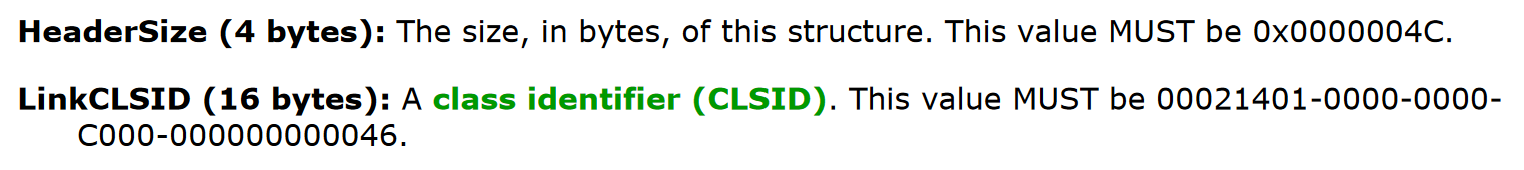

Let’s start with a straightforward rule: determining whether a file is an LNK in the first place. This is possible due to the LNK file header size and CLSID being fixed values:

If you are doing this with “pure” YARA, the rule would look like this:

rule is_lnk {

condition:

uint32(0) == 0x0000004C and

uint32(4) == 0x00021401 and

uint32(8) == 0x00000000 and

uint32(12) == 0x000000C0 and

uint32(16) == 0x46000000

}

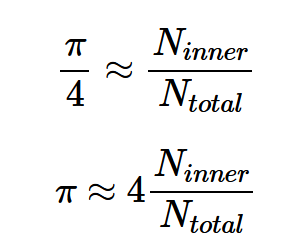

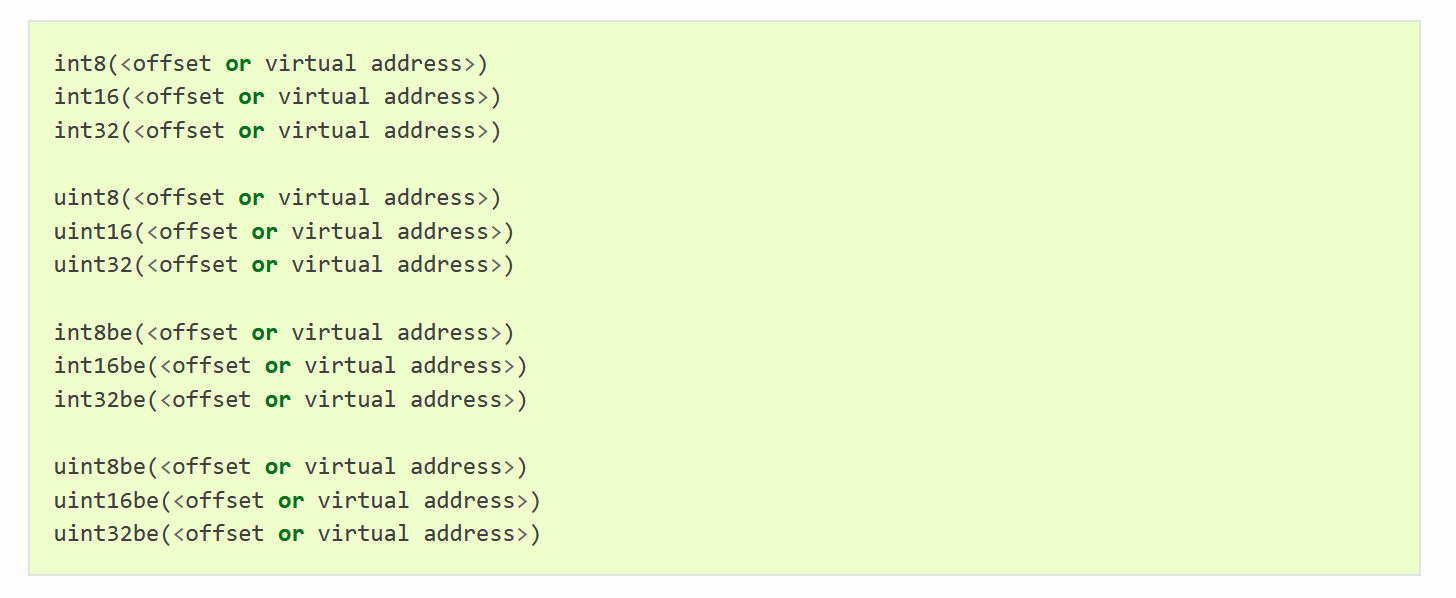

If you’re unfamiliar with the syntax used, YARA has a variety of operators to compare byte values at specific offsets in a file. These come in the form of the int and uint operators (which are both signed and unsigned respectively), and are available for 8-bit, 16-bit and 32-bit values. Later versions of YARA have also added big endian versions of these operators, so the first line of the condition of this rule could also be written as uint32be(0) == 0x4C000000.

As such, it is possible to determine whether a file is an LNK using this method, but requires you to go and read the LNK docs/keep a copy of this condition somewhere for use each time (plus it’s a little verbose if you’re replicating it across many rules).

With the LNK module, this same rule reduces to:

import "lnk"

rule is_lnk {

condition:

lnk.is_lnk

}

The lnk.is_lnk variable is a boolean value, set to 1 if the file being scanned is an LNK, and 0 if it isn’t. As such, just validating that this value is true is enough to determine whether you’re scanning an LNK or not!

(Note: you don’t need to do lnk.is_lnk == true, as it is implicitly checking if it is true)

Aside - Thoughts on file header validation

If you’ve been following #100DaysofYARA so far and seen @greglesnewich’s LNK rules, you’ll notice that he does the check uint32be(0x0) == 0x4C000000 to see if a file is an LNK.

I can’t think of any cases where this won’t be sufficient! Files starting with those 4 bytes are almost certainly going to be LNKs. It’s similar to how a lot of us will write uint16(0) == 0x5A4D to check that a file is a PE; we’re not actually checking the PE header or even validating the rest of the header itself, but just seeing the MZ string at the start is enough for us.

Checking both the header size and CLSID is a bit overkill for a rule’s condition I will admit. However, if you want the assurance of the full header being present, then I think lnk.is_lnk is a lot more concise than the five uint32 checks required.

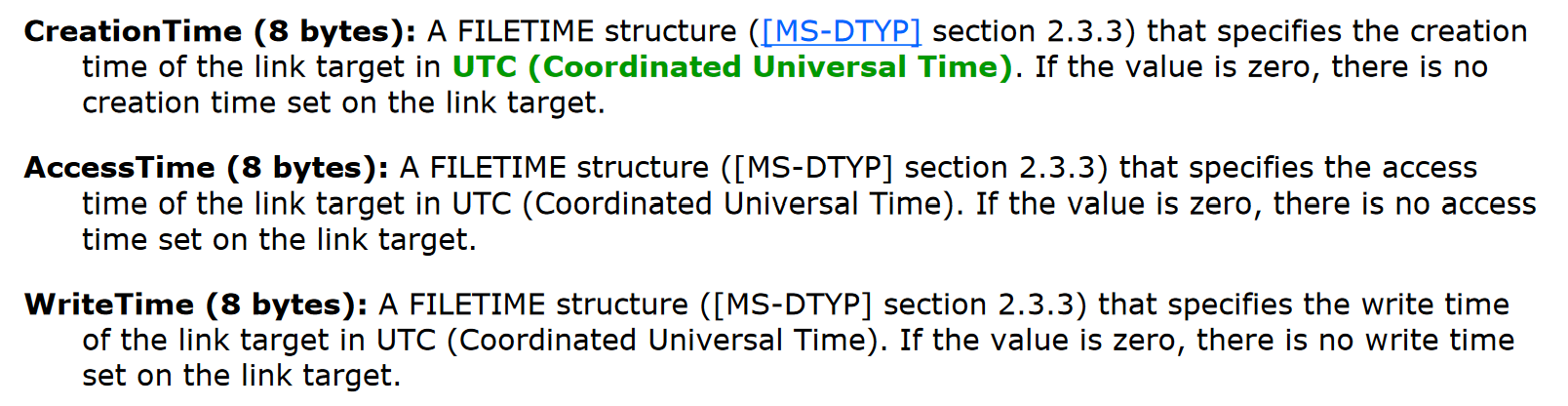

Day 9 - Checking LNK Timestamps

LNKs have three timestamps in their headers: creation time, access time, and write time. All of these are timestamps are in the FILETIME structure format, but for ease of use the LNK module converts them to Unix timestamps (e.g. to make them compatible with the time module).

As such, you can write rules based on these timestamps for a variety of purposes, such as:

- Clustering LNK files with the same timestamps

- Looking for anomalies in LNK timestamps

For example, the following rule will look for an LNK file that has supposedly been created after it has been accessed/last been written to:

import "lnk"

rule Heuristic_LNK_Created_After_Access_or_Write {

meta:

description = "Detects an LNK file with a creation timestamp later than that of its access/write timestamp"

condition:

lnk.creation_time > lnk.access_time or

lnk.creation_time > lnk.write_time

}

And here’s another one that finds LNK files that have been created in the future:

import "lnk"

import "time"

rule Heuristic_LNK_Created_in_Future {

meta:

description = "Detects LNK files with a creation timestamp in the future"

condition:

lnk.creation_time > time.now()

}

Or maybe, you want to look for LNKs where the timestamp has been removed:

import "lnk"

rule Heuristic_LNK_Empty_Timestamp {

meta:

description = "Detects an LNK file with a creation/write/access timestamp that has been zero'ed out"

condition:

lnk.creation_time == 0 or

lnk.write_time == 0 or

lnk.access_time == 0

}

This last rule can be written in pure YARA as follows:

rule Heuristic_LNK_Zeroed_Header_Timestamp {

meta:

description = "Detects an LNK file with a creation/write/access timestamp that has been zeroed out"

condition:

uint32(0) == 0x0000004C and

uint32(4) == 0x00021401 and

uint32(8) == 0x00000000 and

uint32(12) == 0x000000C0 and

uint32(16) == 0x46000000 and

(

// Creation timestamp

(

uint32(28) == 0 and uint32(32) == 0

) or

// Access timestamp

(

uint32(36) == 0 and uint32(40) == 0

) or

// Write timestamp

(

uint32(44) == 0 and uint32(48) == 0

)

)

}

This is possible to do due to the fixed offsets in the LNK header, but makes for a more verbose rule!

(EDIT: it actually looks fairly common that LNKs will have no timestamps; go figure!)

Think about timestamps in different ways

Timestamp anomalies can lead to some really interesting rules! For example, check out Costin Raiu’s slides from a presentation on writing good YARA rules, where slides 48-50 describe how it is possible to track TripleFantasy based on an impossible timestamp in a PE.

Maybe there are more possibilities to create YARA rules for LNKs based on strange timestamp features? Have a go if you’ve got an idea!

Day 10 - LNK File Attributes

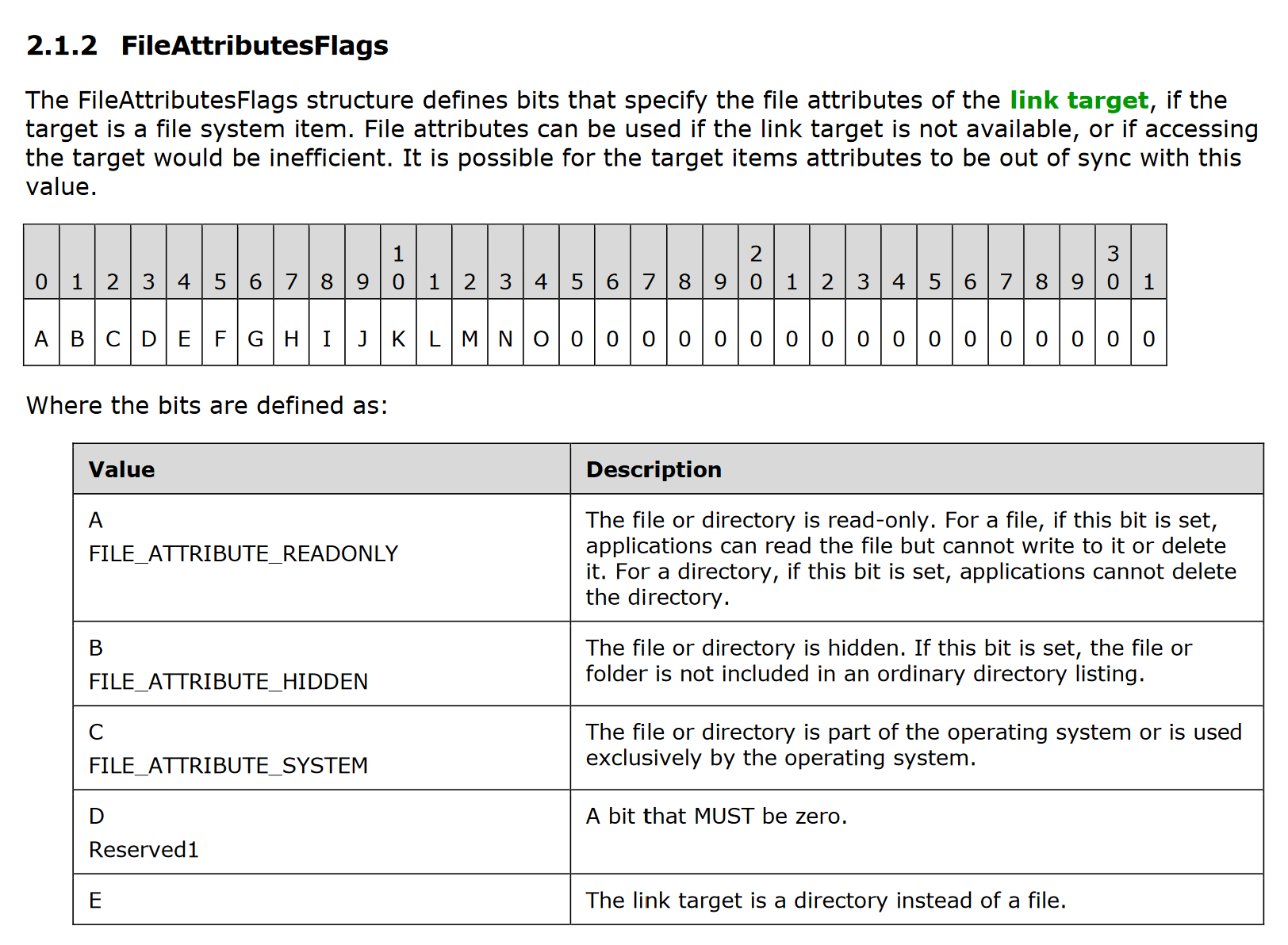

The LNK file headers (and some variables throughout the file format) have flags set with each bit corresponding to different attributes. While a lot of these are more for contextual purposes, some can tell us information about the LNK file or its target, which can be used for heuristics/anomaly detection.

For example, one of the flags present is FileAttributesFlags, which specify the attributes of the link target. An example rule could be to look for LNKs with the FILE_ATTRIBUTE_HIDDEN bit (i.e. 0x00000002) set:

import "lnk"

rule Heuristic_LNK_Hidden_Link_Target {

meta:

description = "Detects LNK files with link targets that are hidden"

condition:

lnk.file_attributes_flags & lnk.FILE_ATTRIBUTE_HIDDEN

}

You can find what all these values correspond to in yara/libyara/include/yara/lnk.h. For example, this rule would be the following in pure YARA:

rule Heuristic_LNK_Hidden_Link_Target {

meta:

description = "Detects LNK files with link targets that are hidden"

condition:

uint32(0) == 0x0000004C and

uint32(4) == 0x00021401 and

uint32(8) == 0x00000000 and

uint32(12) == 0x000000C0 and

uint32(16) == 0x46000000 and

uint32(24) & 0x00000002

}

(Note: this bit being set appears to be very common, so on it’s own may not be enough for a good hunting rule, but combined with other values could lead to some interesting ones)

Day 11 - LNK Hotkeys

While I’m not sure how much value this will have from a threat hunting perspective, LNK files can be run via keyboard shortcuts. Maybe you find a threat actor has a particular shortcut it likes? You can track that with YARA:

import "lnk"

rule Heuristic_LNK_using_Shortcut_F5 {

meta:

description = "Detects LNKs using the keyboard shortcut 'F5'"

condition:

lnk.hotkey == "F5"

}

There is also a corresponding hotkey_modifier_flags value that specifies if any/a combination of HOTKEYF_SHIFT, HOTKEYF_CONTROL, or HOTKEYF_ALT need to be pressed to activate the hotkey:

import "lnk"

rule Heuristic_LNK_using_Shift_Modifier {

meta:

description = "Detects LNKs using a keyboard shortcut, with modifier shift"

condition:

lnk.hotkey_modifier_flags & lnk.HOTKEYF_SHIFT

}

A potential way to hunt for interesting LNK samples may be to look for ones using common keyboard shortcuts for the LNK shortcut. For example, I find myself using Ctrl-C a lot to copy text, so perhaps a threat actor could abuse that to delay execution of an LNK dropped to my system until I next pressed CTRL-C?

import "lnk"

rule Heuristic_LNK_using_Hotkey_Ctrl_C {

meta:

description = "Detects LNKs using the keyboard hotkey Ctrl-C"

condition:

lnk.hotkey == "C" and

lnk.hotkey_modifier_flags & lnk.HOTKEYF_CONTROL

}

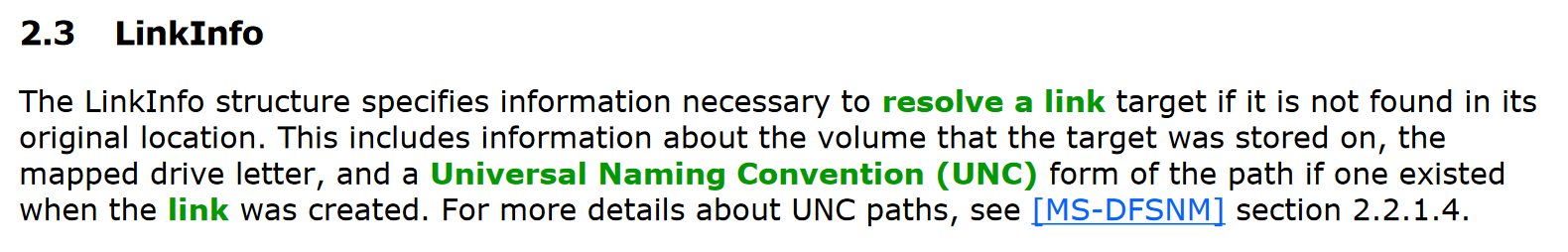

Day 12 - LNK LinkInfo - Part 1

One of the useful structures to signature in LNK files is LinkInfo. Microsoft’s description can be seen below:

The first section of this structure to focus on is the VolumeID, which specifies information about the volume that a link target was on when the link was created. This could therefore give some very useful information about the system used to generate the LNK file (which may well be a threat actor’s system).

An entry in VolumeID is the DriveType, which specifies the type of drive the link target is stored on. This could be a fixed drive, a remote drive, or even removable media or a RAM disk!

Here’s an example rule to pick up LNKs targeting files on removable media:

import "lnk"

rule Heuristic_LNK_Targeting_File_On_Removable_Media {

condition:

lnk.link_info.volume_id.drive_type & lnk.DRIVE_REMOVABLE

}

Day 13 - LNK LinkInfo - Part 2

Perhaps an even more useful component of the VolumeID structure is DriveSerialNumber, which could be used to cluster samples together generated on the same hard drive. This is one I’ve not seen used as much in clustering of LNK files so far:

import "lnk"

rule LNK_Specific_DriveSerialNumber {

condition:

lnk.link_info.volume_id.drive_serial_number == 0x307A8A81

}

It would be interesting to see how much clustering could be done with LNKs that have had other metadata stripped/modified (such as the MachineID which I’ll talk about later on).

An example rule using this value can be seen below, which detects an LNK dropping Emotet from May 2022:

import "lnk"

rule Emotet_LNK_Drive_Serial_May_2022 {

meta:

description = "Detects an LNK from May 2022 tagged as dropping Emotet based on a unique drive serial"

hash = "b7d217f13550227bb6d80d05bde26e43cd752a870973052080a72a510c444b5a"

condition:

lnk.link_info.volume_id.drive_serial_number == 0x1c853811

}

As I like to highlight, this could be done in pure YARA with the following rule:

rule Emotet_LNK_Drive_Serial_May_2022 {

meta:

description = "Detects an LNK from May 2022 tagged as dropping Emotet based on a unique drive serial"

hash = "b7d217f13550227bb6d80d05bde26e43cd752a870973052080a72a510c444b5a"

strings:

$drive_serial = {11 38 85 1c}

condition:

uint32(0) == 0x0000004c and any of them

}

The lnk.link_info.volume_id.data also contains the volume label of the drive, but I’m not sure how useful this would be for clustering purposes.

Day 14 - LNK LinkInfo - Part 3

The LocalBasePath variable which is used to construct the full path to the link item or link target can another good indicator. This variable will either point to the file you want to open with the shortcut, or to the binary you wish to execute with a shortcut (minus the command line arguments, that’s in another variable that we’ll get to later). For example, looking for a specific path:

import "lnk"

rule LNK_LocalBasePath_Example {

condition:

lnk.link_info.local_base_path == "C:\\test\\a.txt"

}

Or, maybe for more heuristic rules:

import "lnk"

rule Heuristic_LNK_LocalBasePath_in_TEMP {

meta:

description = "Detects LNK files with a local base path pointing at the %TEMP% folder"

condition:

lnk.link_info.local_base_path icontains "TEMP"

}

Generally, if you’re looking for execution of non-standard binaries via LNKs, this variable will be useful for you, e.g.:

import "lnk"

rule Heuristic_LNK_LocalBasePath_mshta {

meta:

description = "Detects LNK files pointing at mshta"

condition:

lnk.link_info.local_base_path icontains "mshta"

}

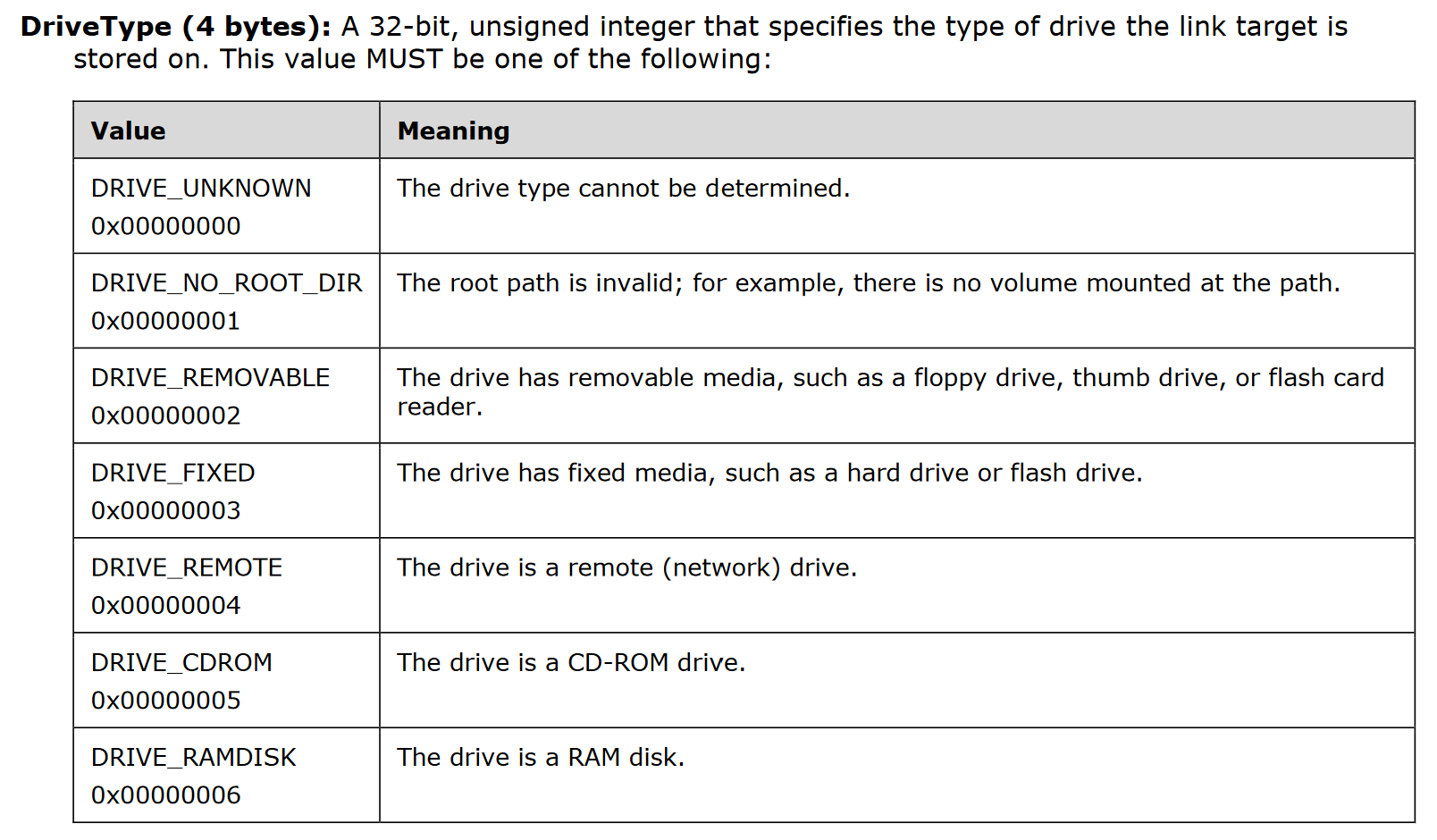

Day 15 - LNK LinkInfo - Part 4

In reading the LNK documentation, all kinds of structures can be seen that are otherwise uncommon, but may be useful in more niche circumstances! For example, the CommonNetworkRelativeLink is a structure present when an LNK points to a file on a network share.

While I’ve not seen this structure in practice for malicious LNKs myself, this could still be useful for hunting purposes to find suspicious LNKs:

import "lnk"

rule Heuristic_LNK_Pointing_to_Network_Share {

meta:

description = "Detects an LNK pointing to the network share '\\\\server\\share'"

condition:

lnk.link_info.common_network_relative_link.net_name == "\\\\server\\share"

}

Day 16 - LNK StringData - Part 1

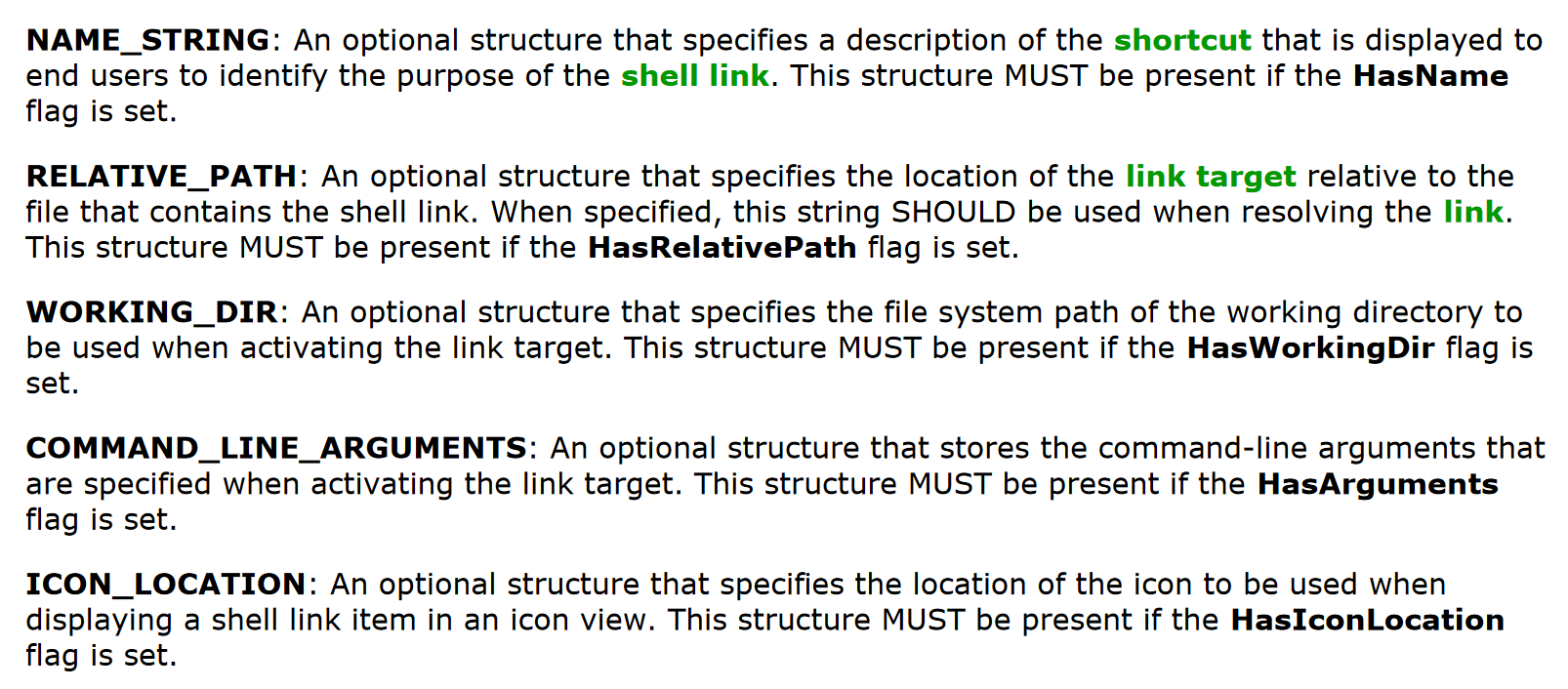

Now that we’ve looked through LinkInfo, we can move to the next optional section of LNK files: StringData. This optional set of structures can contain five different entries:

NAME_STRINGRELATIVE_PATHWORKING_DIRCOMMAND_LINE_ARGUMENTSICON_LOCATION

It’s worth spending a bit of time on these structures, as they can contain some useful information. I’ll spend the next couple of posts going into detail on these.

To start off with, the NAME_STRING entry is perhaps the least useful of the five from a detection/threat hunting perspective, unless you want to hunt for LNKs that have it set to spoof some specific software, for example:

import "lnk"

rule LNK_With_WinRAR_Description {

meta:

description = "Detects LNK files with a description matching that of the WinRAR"

condition:

// Process RAR, ZIP and other archive formats

lnk.name_string == "P\x00r\x00o\x00c\x00e\x00s\x00s\x00 \x00R\x00A\x00R\x00,\x00 \x00Z\x00I\x00P\x00 \x00a\x00n\x00d\x00 \x00o\x00t\x00h\x00e\x00r\x00 \x00a\x00r\x00c\x00h\x00i\x00v\x00e\x00 \x00f\x00o\x00r\x00m\x00a\x00t\x00s\x00"

}

Day 17 - LNK StringData - Part 2

The RELATIVE_PATH, WORKING_DIR and COMMAND_LINE_ARGUMENTS are all somewhat related StringData entries. That is, they collectively describe the filename, directory, and command line arguments for the file the LNK is targeting. These can lead to a lot of useful data points from a detection perspective; particularly COMMAND_LINE_ARGUMENTS.

In fact, a lot of current open source YARA rules implicitly use these fields, even if they don’t target them directly. Bart’s LNK ruleset contains rules looking for references to scripting languages (i.e. in the command line), long relative paths, and references to binaries used to execute files (such as rundll32.exe).

Many different types of YARA rules for LNKs are possible without the LNK module, and that’s a good thing! However, I hope that these fields being exposed directly through a YARA module will allow us all to be much more specific in the types of rules we want to write.

So for instance, if we want to look for /c in a command line argument of an LNK file (i.e. to run them command then terminate the instance of cmd.exe), this is currently a bit harder to specify without it potentially being more false positive-prone (and having some performance concerns):

rule Heuristic_LNK_Slash_c_In_Command_Line {

meta:

description = "Detects LNK files that have '/c' in its command line"

strings:

$ = "/c" ascii wide

condition:

uint32be(0) == 0x4C000000 and

uint32be(4) == 0x01140200 and

uint32be(8) == 0x00000000 and

uint32be(12) == 0xC0000000 and

uint32be(16) == 0x00000046 and

any of them

}

However, using the LNK module, we can be more precise, and hopefully avoid looking in large files for all occurances of /c:

import "lnk"

rule Heuristic_LNK_Slash_c_In_Command_Line {

meta:

description = "Detects LNK files that have '/c' in its command line"

condition:

lnk.command_line_arguments contains "/\\x00c"

}

Asides

- The benefit of using the LNK module is we can drop the condition using all the

uint32bestatements checking whether a file is an LNK, as the module will guarantee that the file is an LNK if the fieldlnk.command_line_argumentsis present - At the moment you can see an annoying design of LNKs in that the

StringDatafields are either unicode, or whatever the default code page of the system the LNK is generated on is. This means we have to include null characters in the string to check. While I’d like the module to convert these fields to ASCII, this could lose information that might otherwise be important. Let me know what you think about having something like alnk.command_line_arguments_asciifield. Potentially another solution would be to add support to YARA for wide character string comparisons, such aslnk.command_line_arguments contains L"/c"(Lis the default syntax used to define wide character strings in C).

Edit: I’ve added an issue to YARA to add L"" as a modifier: https://github.com/VirusTotal/yara/issues/1863

Day 18 - LNK StringData - Part 3

Last but not least in the StringData section is ICON_LOCATION, which specifies the location of the icon to be used when displaying a shell link item in an icon view. This structure has an interesting case study I observed in some research I conducted into APT41 as part of my job.

In some LNK files related to the threat actor, it used icon locations such as .\1.pdf and .\1.doc. While these icon locations may not point to a real file on the system, this value being present will set the icon for the LNK file being that of either a PDF or Microsoft Office file respectively. This can make the LNK file look more convincing.

I shared the following open source YARA rule for this technique:

rule APT41_Icon_Location_LNK : Red_Kelpie

{

meta:

description = "Detects LNK files masquerading as PDFs likely used by APT41"

TLP = "WHITE"

author = "PwC Cyber Threat Operations :: BitsOfBinary"

copyright = "Copyright PwC UK 2021 (C)"

license = "Apache License, Version 2.0"

created_date = "2021-08-26"

modified_date = "2021-08-26"

revision = "0"

hash = "2218904238dc4f8bb5bb838ed4fa779f7873814d7711a28ba59603826ae020aa"

hash = "5904bc90aec64b12caa5d352199bd4ec2f5a3a9ac0a08adf954689a58eff3f2a"

hash = "c98ac83685cb5f7f72e832998fec753910e77d1b8eee638acb508252912f6cf6"

hash = "a44b35f376f6e493580c988cd697e8a2d64c82ab665dfd100115fb6f700bb82a"

strings:

$pdf = ".\\1.pdf" ascii wide

$doc = ".\\1.doc" ascii wide

condition:

uint32be(0) == 0x4C000000 and

uint32be(4) == 0x01140200 and

uint32be(8) == 0x00000000 and

uint32be(12) == 0xC0000000 and

uint32be(16) == 0x00000046 and

any of them

}

While in hindsight I don’t believe that all samples with these icon locations are related to APT41 (hence the fortunate use of likely in the rule description), this rule has generally been useful in finding malicious LNK files masquerading as either PDFs or Word documents.

If you wanted to recreate this rule with the LNK module, you could do it as such:

import "lnk"

rule Heuristic_LNK_Icon_Location_Masquerading_as_Doc_or_PDF {

condition:

lnk.icon_location contains ".\\x00\\\\x001\\x00.\\x00p\\x00d\\x00f" or

lnk.icon_location contains ".\\x00\\\\x001\\x00.\\x00d\\x00o\\x00c"

}

No strings: section needed here.

If you’re interested in this research into APT41, you can find the slides, full set of YARA rules, and link to the recording here: https://github.com/PwCUK-CTO/TheSAS2021-Red-Kelpie/blob/main/yara/red_kelpie.yar

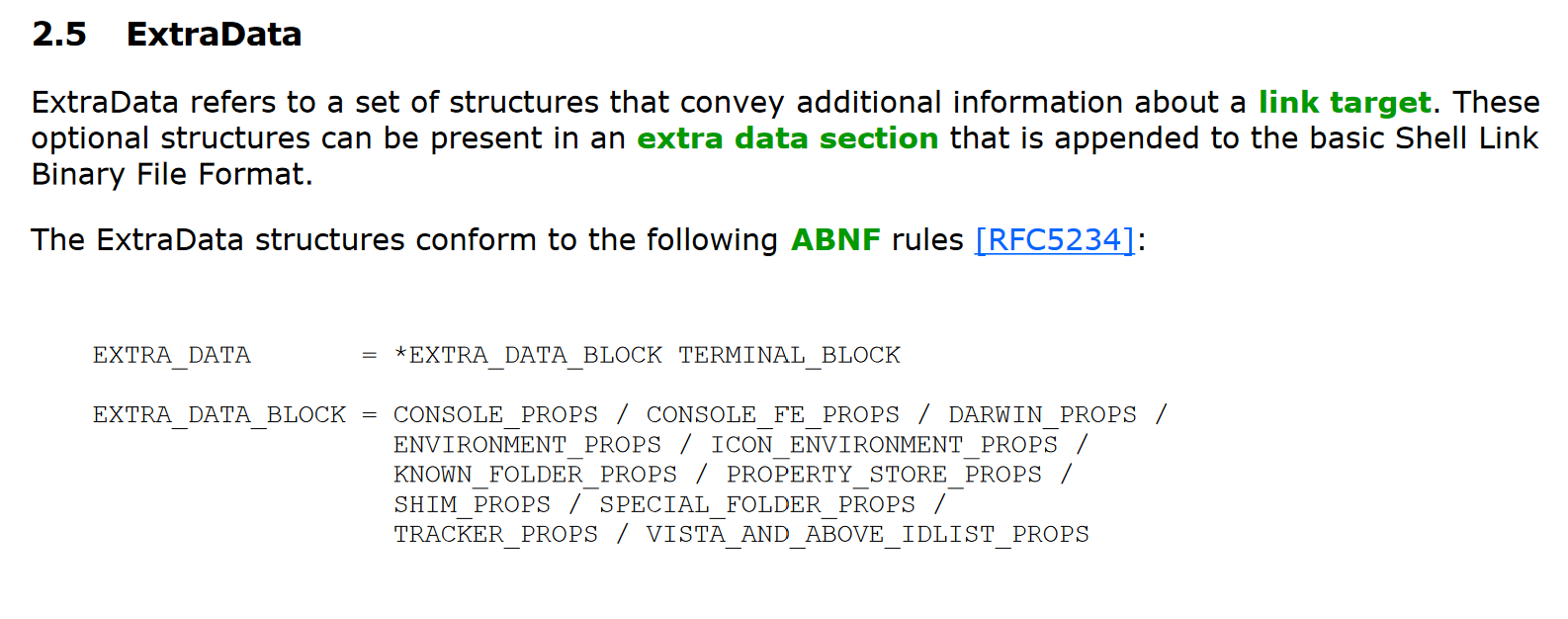

Day 19 - LNK ExtraData

The next few posts will focus on the final set of optional structures in LNK files, which are in the ExtraData section.

As you can see, these consist of 11 different structures, which I’ll list below with a description from the LNK docs:

CONSOLE_PROPS: specifies the display settings to use when a link target specifies an application that is run in a console window.CONSOLE_FE_PROPS: specifies the code page to use for displaying text when a link target specifies an application that is run in a console window.DARWIN_PROPS: specifies an application identifier that can be used instead of a link target IDList to install an application when a shell link is activated.ENVIRONMENT_PROPS: specifies a path to environment variable information when the link target refers to a location that has a corresponding environment variable.ICON_ENVIRONMENT_PROPS: specifies the path to an icon. The path is encoded using environment variables, which makes it possible to find the icon across machines where the locations vary but are expressed using environment variables.KNOWN_FOLDER_PROPS: specifies the location of a known folder. This data can be used when a link target is a known folder to keep track of the folder so that the link target IDList can be translated when the link is loaded.PROPERTY_STORE_PROPS: specifies a set of properties that can be used by applications to store extra data in the shell link.SHIM_PROPS: specifies the name of a shim that can be applied when activating a link target.SPECIAL_FOLDER_PROPS: specifies the location of a special folder. This data can be used when a link target is a special folder to keep track of the folder, so that the link target IDList can be translated when the link is loaded.TRACKER_PROPS: specifies data that can be used to resolve a link target if it is not found in its original location when the link is resolved. This data is passed to the Link Tracking service to find the link target.VISTA_AND_ABOVE_IDLIST_PROPS: specifies an alternate IDList that can be used instead of the LinkTargetIDList structure (section 2.2) on platforms that support it.

As such, there is a lot of possibilities for detection rules based on these structures! Let’s start looking into those in the next posts.

Day 20 - LNK CONSOLE_PROPS - Part 1

The LNK CONSOLE_PROPS gives information on how to display the LNK to the user. @greglesnewich has done a really cool Jupyter notebook used to analyse and write some YARA rules based on this section. In particular, he has written a rule that will look for LNKs that have set both WindowSizeX and WindowSizeY to 1 pixel, and ScreenBufferSizeX and ScreenBufferSizeY to also be of size 1, and done it using pure YARA (i.e. no modules involved!).

rule SUSP_LNK_SmallScreenSize

{

meta:

author = "Greg Lesnewich"

description = "check for LNKs that have a screen buffer size and WindowSize dimensions of 1x1"

date = "2023-01-01"

version = "1.0"

DaysofYARA = "1/100"

strings:

$dimensions = {02 00 00 A0 ?? 00 ?? ?? 01 00 01 00 01}

// struct ConsoleDataBlock sConsoleDataBlock

// uint32 Size

// uint32 Signature

// enum FillAttributes

// enum PopupFillAttributes

// uint16 ScreenBufferSizeX

// uint16 ScreenBufferSizeY

// uint16 WindowSizeX

// uint16 WindowSizeY

condition:

uint32be(0x0) == 0x4c000000 and all of them

}

I love this rule, as it shows how capable YARA is without even needing anything extra. Greg is using the fact that the ConsoleDataBlock structure has a fixed BlockSignature of 0xA0000002 to “parse” out the relevant variables in a hex string (using wildcards to skip the ones he doesn’t care about). No LNK module needed!

So why do I still think the LNK module is useful? First, this approach will not always be possible in LNKs using pure YARA (we can fortunately rely on BlockSignature values here to find the right structure). Second, even when it’s possible, it requires some understanding enough about the YARA syntax (as Greg clearly does) to write a rule to take advantage of this!

Creating an LNK with CONSOLE_PROPS

Not all LNKs come with with ConsoleDataBlock structure, so if we want to experiment with values we’ll need to be able to generate them! The best way I’ve found to do this on Windows 11 is:

- Create shortcut link for

cmd.exe - Right click on the shortcut and go to

Properties - Go on the

Layouttab - Edit the

Screen buffer sizeandWindow sizevalues to all be1

![]()

In the next post we’ll recreate Greg’s rule using the LNK module.

Day 21 - LNK CONSOLE_PROPS - Part 2

If we dump the module information using the -D flag of the LNK generated in the previous post, we’ll see the following for the CONSOLE_PROPS section:

console_data

color_table

[0] = 789516

[1] = 14300928

[2] = 958739

[3] = 14521914

[4] = 2035653

[5] = 9967496

[6] = 40129

[7] = 13421772

[8] = 7763574

[9] = 16742459

[10] = 837142

[11] = 14079585

[12] = 5654759

[13] = 10354868

[14] = 10875385

[15] = 15921906

history_no_dup = 0

number_of_history_buffers = 4

history_buffer_size = 50

auto_position = 1

insert_mode = 1

quick_edit = 1

full_screen = 0

cursor_size = 25

face_name = "C\x00o\x00n\x00s\x00o"

font_weight = 400

font_family = 54

font_size = 1048576

window_origin_y = 0

window_origin_x = 0

window_size_y = 1

window_size_x = 1

screen_buffer_size_y = 1

screen_buffer_size_x = 1

popup_fill_attributes = 245

fill_attributes = 7

block_signature = 2684354562

block_size = 204

has_console_data = 1

As always, I’d recommend reading through the LNK documentation to make sense of each field (the docs I’ve generated for the LNK module will also give details on each of these). But we’re going to focus on recreating Greg’s rule highlighted in the previous post.

The fields of interest here are window_size_x, window_size_y, screen_buffer_size_x and screen_buffer_size_y, all of which are set to 1. Therefore, we can recreate Greg’s rule using the LNK module as follows:

import "lnk"

rule Heuristic_LNK_SmallScreenSize {

meta:

description = "Adaptation of @greglesnewich's rule to find LNKs with a 1x1 console size"

condition:

lnk.console_data.window_size_x == 1 and

lnk.console_data.window_size_y == 1 and

lnk.console_data.screen_buffer_size_x == 1 and

lnk.console_data.screen_buffer_size_y == 1

}

Hopefully the LNK module makes this rule both more readable (Greg did a great job commenting his rule to be clear), and more accessible to those who don’t want to go and comb through the LNK documentation like Greg and I both have!

What else can we do?

As can be seen in the data available, there are lots of different variables in this structure, including data to do with fonts, number of buffers available, etc.

Maybe we could also write a rule based on the window origin? I realised when re-reading the docs that the following variables in the CONSOLE_PROPS section are treated as signed integers:

ScreenBufferSizeX;ScreenBufferSizeY;WindowSizeX;WindowSizeY;WindowOriginX;WindowOriginY;

When manually editing the properties of the cmd.exe LNK, I could only get the window origin values to go down to -5. However, it still may be worth looking for LNKs that have a negative size window origin (maybe in an attempt to hide the LNK off the side of the main window?):

import "lnk"

rule Heuristic_LNK_Negative_Window_Origin {

meta:

description = "Detects LNKs that have a negative value window origin location"

condition:

lnk.console_data.window_origin_x < 0 and

lnk.console_data.window_origin_y < 0

}

There’s no doubt that there are more possibilities for detections/clustering of LNKs based on the CONSOLE_PROPS values, so I’d be interested to see what people come up with based on the data available!

Day 22 - LNK CONSOLE_FE_PROPS

As you can hopefully see already, these ExtraData structure can provide a lot of useful data to work with from a threat hunting perspective.

Take the next section, CONSOLE_FE_PROPS for example. While this structure only really has one variable of interest, the CodePage which specifies a code page language code identifier may give away a crucial piece of information from an attribution/clustering perspective about the threat actor that generated it! I haven’t come across this structure in practice yet, but it could prove to be a useful field to write some heuristic rules based on different code page values.

If you would like to hunt for different LNKs with different ExtraData structures, each structure has a boolean variable that will indicate whether it is present or not. For example, lnk.has_console_fe_data will be set if the CONSOLE_FE_PROPS structure is in the LNK you are targeting.

import "lnk"

rule Heuristic_LNK_With_ConsoleFEData {

meta:

description = "Detects LNK with ConsoleFEData structure"

condition:

lnk.has_console_fe_data

}

Day 23 - LNK TRACKER_PROPS - Part 1

An ExtraData section I want to focus on is TRACKER_PROPS; one which people who have tried writing YARA rules for LNKs have likely come up against before. The structure specifies data that can be used to resolve a link target if it is not found in its original location when the link is resolved. In particular, the field MachineID which specifies the NetBIOS name of the machine where the link target was last known to reside can be a very valuable IoC for writing rules for LNKs.

The NetBIOS name is used as a human-friendly way of identifying Windows devices on a network. You can find out what you own NetBIOS name is by running nbtstat -n.

Luckily for us threat hunters, this field is left in by default in a lot of LNK files. This means that if a threat actor generates multiple LNK files from the same Windows instance (whether a physical device or a VM), this MachineID will be consistent across them if left unedited.

I’ve used this value several times to cluster LNK files together. For an example of this, let’s take an LNK from the IoCs of a Palo Alto blog on Trident Ursa (more commonly known as Gamaredon Group) and write a rule based on the MachineID:

import "lnk"

rule TridentUrsa_LNK_Machine_ID {

meta:

description = "Rule to pick up LNKs used by Gamaredon Group/Trident Ursa based on a unique MachineID"

hash = "f119cc4cb5a7972bdc80548982b2b63fac5b48d5fce1517270db67c858e9e8b0"

reference = "https://unit42.paloaltonetworks.com/trident-ursa/"

reference = "https://github.com/pan-unit42/iocs/blob/master/Gamaredon/Gamaredon_IoCs_DEC2022.txt"

condition:

lnk.tracker_data.machine_id == "desktop-farl139"

}

Of course, this rule can also be written without the LNK module by relying just on strings. In a way, this is preferable, as you can hunt for this MachineID for LNKs attached to emails, dropped via HTML pages, etc.:

rule TridentUrsa_LNK_Machine_ID {

meta:

description = "Rule to pick up LNKs used by Gamaredon Group/Trident Ursa based on a unique MachineID"

hash = "f119cc4cb5a7972bdc80548982b2b63fac5b48d5fce1517270db67c858e9e8b0"

reference = "https://unit42.paloaltonetworks.com/trident-ursa/"

reference = "https://github.com/pan-unit42/iocs/blob/master/Gamaredon/Gamaredon_IoCs_DEC2022.txt"

strings:

$ = "desktop-farl139"

condition:

any of them

}

Day 24 - LNK TRACKER_PROPS - Part 2

While I think the MachineID is the most useful component of the TRACKER_PROPS, it isn’t the only one we can use! There are two further variables called Droid and DroidBirth in this structure, which reprsent two GUID values to used to find the link target with the Link Tracking service.

I find the LNK documentation to be rather lacking in explaining how these GUIDs actually work/what they represent… Eric Zimmerman’s LECmd tool parses these values out as VolumeDroid and FileDroid, which maybe gives a bit more context, although these values aren’t specified in the LNK documentation.

Maybe these values are more well know to those who conduct forensics; but despite this, it is yet another value that could be useful in clustering LNK files together! (e.g. lnk.tracker_data.droid_volume_identifier == "\\x40\\x78\\xC7\\x94\\x47\\xFA\\xC7\\x46\\xB3\\x56\\x5C\\x2D\\xC6\\xB6\\xD1\\x15")

I elected in the LNK module to use the terms of droid_volume and droid_file to align with the LNK parsers, as I think these values are easier to reference.

So taking that example Trident Ursa LNK from the previous post, we can also write a YARA rule for its Droid values as well:

import "lnk"

rule TridentUrsa_LNK_Droid_Values {

meta:

description = "Rule to pick up LNKs used by Gamaredon Group/Trident Ursa based on unique Droid GUIDs"

hash = "f119cc4cb5a7972bdc80548982b2b63fac5b48d5fce1517270db67c858e9e8b0"

reference = "https://unit42.paloaltonetworks.com/trident-ursa/"

reference = "https://github.com/pan-unit42/iocs/blob/master/Gamaredon/Gamaredon_IoCs_DEC2022.txt"

condition:

lnk.tracker_data.droid_birth_file_identifier == "\xc6\x1f\x17\xb9\xcc\x07\xeb\x11\xb4#\x08\x00'.\x05\x1d" or

lnk.tracker_data.droid_birth_volume_identifier == "By\x013NQ\xa0G\xa1\xe0v\x7fh\xb9N\xa1" or

lnk.tracker_data.droid_file_identifier == "\xc6\x1f\x17\xb9\xcc\x07\xeb\x11\xb4#\x08\x00'.\x05\x1d" or

lnk.tracker_data.droid_volume_identifier == "By\x013NQ\xa0G\xa1\xe0v\x7fh\xb9N\xa1"

}

Day 25 - LNK Module Concessions

I’ve already raised this point, but to address something worth talking about, the LNK module isn’t always needed for the types of rules I’m showing. For example, you may have asked yourselves that for the two previous posts: “Why do I need the LNK module to write these LNK rules? Can’t I just use pure YARA?”. And you would be right! Here are the same two rules from the previous posts, but without needing the LNK module:

rule TridentUrsa_LNK_Machine_ID {

meta:

description = "Rule to pick up LNKs used by Gamaredon Group/Trident Ursa based on a unique MachineID"

hash = "f119cc4cb5a7972bdc80548982b2b63fac5b48d5fce1517270db67c858e9e8b0"

reference = "https://unit42.paloaltonetworks.com/trident-ursa/"

reference = "https://github.com/pan-unit42/iocs/blob/master/Gamaredon/Gamaredon_IoCs_DEC2022.txt"

strings:

$ = "desktop-farl139"

condition:

any of them

}

rule TridentUrsa_LNK_Droid_Values {

meta:

description = "Rule to pick up LNKs used by Gamaredon Group/Trident Ursa based on unique Droid GUIDs"

hash = "f119cc4cb5a7972bdc80548982b2b63fac5b48d5fce1517270db67c858e9e8b0"

reference = "https://unit42.paloaltonetworks.com/trident-ursa/"

reference = "https://github.com/pan-unit42/iocs/blob/master/Gamaredon/Gamaredon_IoCs_DEC2022.txt"

strings:

$file_identifier = {c6 1f 17 b9 cc 07 eb 11 b4 23 08 00 27 2e 05 1d}

$volume_identifier = {42 79 01 33 4e 51 a0 47 a1 e0 76 7f 68 b9 4e a1}

condition:

any of them

}

These rules will pick up the same samples as the ones using the LNK module. In some ways you could argue that they’re even simpler:

- They don’t need to import any modules

- They can rely purely on strings

- If you don’t care about validating it’s an LNK, you can have much simpler condition

So why go to all the bother of writing a module for LNKs? Because I think that there is a lot of power in being able to manipulate these variables yourself.

For example, if you wanted to write a rule looking for LNKs with a MachineID that doesn’t start with desktop-, how would you do that using pure YARA? It’s not so straightforward, whereas the LNK module means you can do this:

import "lnk"

rule Heuristic_LNK_MachineID_Not_Starting_with_desktop {

meta:

description = "Detects LNK files that have a MachineID that doesn't start with 'desktop-'"

condition:

not lnk.tracker_data.machine_id startswith "desktop-"

}

The LNK module won’t always be ideal for your use case; but with the data parsed and served to you for you to work with and write rules on, I think it can give you a lot of flexibility to writing rules of your liking.

Day 26 - LNK Overlay

Capability I wanted to add to the LNK module was to allow for the creation of rules depending on if data is appended to an LNK! As such, there are two variables added:

lnk.has_overlay- A boolean value that is true if the LNK has extra data appended to itlnk.overlay_offset- An unsigned integer representing the offset into the LNK file of where the overlay starts (only set if the has_overlay flag is true)

This means it is possible to write rules based on looking for data from the end of an LNK file, such as the following:

import "lnk"

rule Heuristic_LNK_with_PE_Appended {

meta:

description = "Detects an LNK file that has had a PE file appended to it"

condition:

uint16(lnk.overlay_offset) == 0x5A4D

}

While you could equally look for strings like This program cannot be run in DOS mode in an LNK to try and find embedded PE files, these variables allow you to be more precise. For example, if you wanted to look for LNKs that have encrypted data appended to them, you could use a rule like this:

import "lnk"

import "math"

rule Heuristic_LNK_with_High_Entropy_Data_Appended {

meta:

description = "Detects LNK files appended with high entropy data (i.e. likely encrypted data)"

condition:

math.entropy(lnk.overlay_offset, filesize - lnk.overlay_offset) > 7.9

}

Day 27 - Malformed LNK Detection

A more experimental field I’ve added to the LNK module is lnk.is_malformed. This is a boolean field set if the LNK module fails to parse a file that otherwise has a valid LNK header.

Something that I learnt the hard way through writing the C code for the LNK module is about not trusting the data that is used as an input! If you assume a field is a particular size (either through a fixed length, or through parsing a field), then you can run into all kinds of vulnerabilities that could be exploited.

So the main purpose of this field is that if an LNK were designed to purposefully try to cause exceptions in a parser, this is something I’d want to catch; both from the perspective of making the code fail gracefully, but also from a potential detection standpoint! The dream would be that this variable is able to find an LNK exploiting some kind of vulnerability in a parser (I’m realistic in my expectations that this won’t happen, but I can dream).

The reality is that it’ll almost certainly catch either broken LNKs, or just be a useful way of finding edge cases that the LNK module doesn’t handle correctly.

Anyway, here’s a rule using this variable:

import "lnk"

rule Heuristic_Malformed_LNK {

meta:

description = "Detects a malformed LNK"

condition:

lnk.is_malformed

}

Day 28 - Future Considerations for the LNK Module

Like any of the YARA modules, I don’t see the LNK module as being “complete”. There have been some fantastic additions to the PE module over the years which create many more opportunities for detection, so there’s always more we can add.

First up, I would like for the LNK module to be merged into YARA. This may be a big undertaking in of itself; I wouldn’t want it to get merged in without proper review, as to not introduce any vulnerabilities, and to make sure that all the variables are laid out sensibly. However, if it gets merged in I will be happy. If it gets merged in, and available as a default module, I will be very happy.

So what is there to build on top of the LNK module? To start with, there needs to be a little more work done to parse all structures. For example, lnk.property_store_data has not had a parser written up for it yet, so that would be next on my todo list.

There are some structures in LNKs that I don’t fully understand yet. For example, the IDList structures aren’t that descriptively documented in the LNK documentation, but look to hold some useful data that we could parse for detection purposes.

Several of the parsed fields are awkward to use in their current format, such as the StringData values which are in unicode. I’d like to figure out how better to surface those.

And in general, I’d like to research what other variables would be useful to create in the LNK module. My motivation for this module was to make it as useful as possible for analysts trying to signature LNKs; if there’s something you’d want added to make your life easier, I would love to hear about it!

Day 29 - Combining the LNK Module with the Console Module

One of my earlier posts highlighted that you could print out all the variables parsed by the LNK module with the -D flag. This is really useful for getting a complete picture of a file, but would potentially need extra parsing to get the values you want.

Say you had a large set of LNK files and you wanted to get all the MachineID values out of them. Dumping all the information for every sample would be a bit excessive, and you may not want to dig out an LNK parser from elsewhere to work with.

With YARA’s recent Console module addition, this task becomes a lot more straightforward! In short, the Console module will log messages to stdout via the console.log() API. There are several different formats you can apply to this function, which involve either just printing strings, or printing messages alongside what you print. console.log() will also always evaluate to try, so you can insert it into rules without the fear of breaking their conditions.

So, to print all the MachineID values of a set of LNKs, we can use the following rule:

import "lnk"

import "console"

rule LNK_MachineID {

condition:

lnk.is_lnk and

console.log("MachineID: ", lnk.tracker_data.machine_id)

}

You’ll need to do a little extra processing to get the results in a nice format (e.g. in this case using grep "MachineID: " to only parse out the MachineID values rather than the rule results), but hopefully this shows that YARA can be used for quick/straightforward file processing if you want it to!

Day 30 - Other Module Ideas

I’ve spent many posts now describing the capability of the LNK module; a module which I believe will make YARA more versatile. So what other modules would be good ideas to develop/include in YARA? Here are a few ideas:

- ZIP module

- RAR module

- ISO module

- Assembler module

- File identification module

ZIP/RAR module

This may be a bit misleading, as I don’t think YARA should be designed to decompress archive files. If you write YARA rules frequently, you’ve probably come up against the frustration of not being able to see in archives; but I think that’s a limitation we have to accept. It would remove a lot of the efficiency of YARA to start doing decompression.

However, a module to parse the metadata/file structure of these archives might be something very useful! That would open up possibilities to do some nice heuristics on compressed files without having to decompress them.

ISO module

Same reasoning as the ZIP/RAR module ideas, but highlighting this separately given the increased use of ISO files by threat actors!

Assembler module

This is a bit out there, but what if we could write rules using assembly language instead of hex bytes? Maybe this could make rules more readable? Maybe it could allow YARA to more easily convert bytes to assembly, and back again? Maybe it’s a silly idea? Sometimes we won’t know the answers unless we try!

File identification module

If you’re a malware analyst, chances are you’ll love tools like Detect It Easy (DIE). What if similar functionality was built into YARA itself? I know a lot of the signatures that DIE use could be converted directly into YARA rules, but this would potentially make YARA more convenient to use when writing signatures for specific file types.

Modules everywhere

What ideas would you want to see for a YARA module?

Day 31 - YARA Tip - Hex values in text strings - Part 1

Did you know that you can put specific hex values in YARA text strings?

You can write YARA rules specifically for hexadecimal strings, as follows:

rule Hex_String_Test {

strings:

$ = {AB CD EF 00}

condition:

any of them

}

You can write an equivalent for this rule using text strings by prepending each byte with \x, as follows:

rule Hex_Chars_in_Text_String_Test {

strings:

$ = "\xAB\xCD\xEF\x00"

condition:

any of them

}

This rule is equivalent to the one above! It can be useful if you want to have a mixture of ASCII/non-ASCII characters in a text string (and some other benefits that I’ll go into tomorrow).

Thank you to David Cannings (@edeca) for teaching me this (among many other YARA tips/tricks over the years!).

Day 32 - YARA Tip - Hex values in text strings - Part 2

A benefit of writing hex values in text strings is that it allows you to apply modifiers to these strings. For example, if you wanted to look for all one-byte XOR values of {AB CD EF 00}, you would have to compute those values yourself and write a rule with all 255 versions of them:

rule One_Byte_XOR_Hex_Strings {

meta:

description = "Detects all one-byte XOR values of {AB CD EF 00}"

strings:

$key_01 = {aa cc ee 01}

$key_02 = {a9 cf ed 02}

// ... truncated for size ...

$key_fe = {55 33 11 fe}

$key_ff = {54 32 10 ff}

condition:

any of them

}

Instead of going to all this effort yourself, and having a rule that has 255 lines of strings, you can use the xor modifier like this:

rule One_Byte_XOR_Hex_Strings {

meta:

description = "Detects all one-byte XOR values of {AB CD EF 00}"

strings:

$ = "\xAB\xCD\xEF\x00" xor(0x01-0xff)

condition:

any of them

}

Both of these rules are equivalent in what they achieve/the efficiency in which they achieve it (the xor modifier will still have to generate and check for each variation as specified), but the second one is much more succinct and requires a lot less effort on your part.

Day 33 - YARA Tip - Hex values in text strings - Part 3

To really drive home the point about the use of hex values in text strings, here’s a final example using the Base64 modifier in YARA:

rule Reflective_Loader_Shellcode_Base64_Encoded {

meta:

description = "Detects Base64 encoded reflective loader shellcode stub, seen for example in Meterpreter samples"

hash = "ed48d56a47982c3c9b39ee8859e0b764454ab9ac6e7a7866cdef5c310521be19"

hash = "76d54a57bf9521f6558b588acd0326249248f91b27ebc25fd94ebe92dc497809"

hash = "1db32411a88725b259a7f079bdebd5602f11130f71ec35bec9d18134adbd4352"

strings:

// pop r10

// push r10

// push rbp

// mov rbp, rsp

// sub rsp, 20h

// and rsp, 0FFFFFFFFFFFFFFF0h

// call $+5

// pop rbx

$ = "\x4D\x5A\x41\x52\x55\x48\x89\xE5\x48\x83\xEC\x20\x48\x83\xE4\xF0\xE8\x00\x00\x00\x00\x5B" base64 base64wide

condition:

any of them

}

Here, I have taken the shellcode stub seen in Reflective loader samples, such as Meterpreter payloads. You can obviously just search for this hex string to find the PE/shellcode payloads; however given those may possibly only ever end up in memory, it might be better to hunt for the loaders as well.

So using the fact that we can use hex values in text strings, I’ve taken that shellcode stub, converted it to a text string, and applied the base64 and base64wide modifiers to find samples which have this shellcode Base64 encoded in them, which will catch PowerShell loaders for example!

This is one of those examples where you can take the string of one rule (i.e. finding Reflective Loader samples) and easily repurpose it for another one. You could also repeat this for one-byte XOR values too if you wanted!

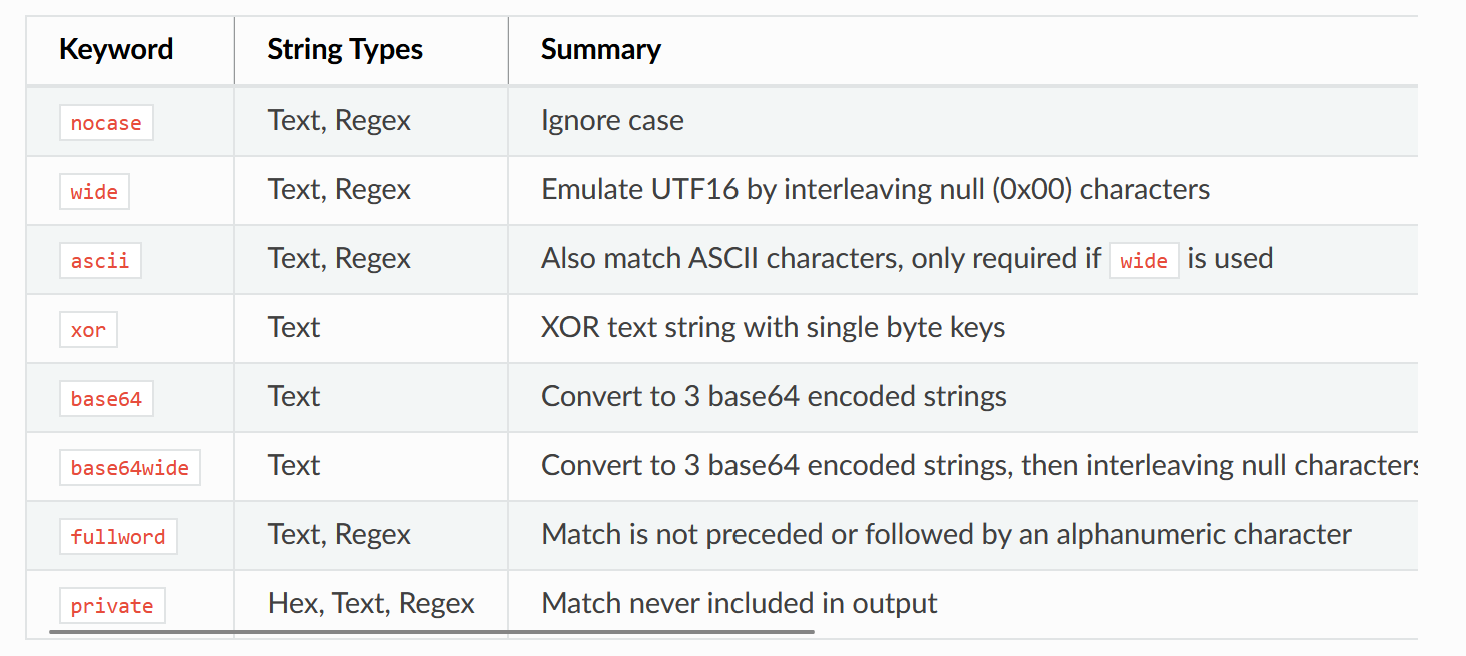

Day 34 - Text strings vs. Hexadecimal strings

I’ve just spent the last few posts suggesting how effective it can be converting hexadecimal strings into text strings. However, as is usually the case, there are tradeoffs to using this approach.

You may have noticed already that the strings I used in the previous rules are “fixed”; there are no wildcards or variable chunks. Yet, these are the most useful features of using hexadecimal strings, as it allows you to write rules for code where you apply wildcards to mask off specific bytes to write more generic rules!

Wildcards and variable chunks are not possible in YARA text strings; instead you would need to start using regular expressions, but this also then loses the ability to apply certain modifiers:

In summary, there’s usually going to be some tradeoff for the type of string you pick (text, hex, or regex) in YARA, but the more familiar you are with these tradeoffs, the better decisions you can make on which one to use!

Day 35 - YARA Atoms - Introduction

As you start getting more advanced in your usage of YARA, you will want to make sure you’re writing efficient rules. A good concept to understand in YARA is that of atoms, which are well explained in the code that implements them: https://github.com/VirusTotal/yara/blob/2b631d0ee47650923955398921c1ceccc3e38cb1/libyara/atoms.c

As the code points out:

Atoms are undivided substrings found in a regexps and hex strings. … When searching for regexps/hex strings matching a file, YARA uses these atoms to find locations inside the file where the regexp/hex string could match.

As such, if you are using using hex/regex strings which use wildcards/variable ranges, YARA will do some work behind the scenes to work out the best substrings to try and match the whole string you have specified. The code points out that sometimes this is straightforward, and you only need to search for one atom in a string to evaluate whether it matches. However, the more alternative sequences you add into your hex/regex strings (e.g. {ab cd (ef | 00)}), the more atoms may be needed to successfully evaluate a string match.

YARA will create an atom tree which it will then use to try and optimise using the least number of high quality atoms for searching in files. In the next post, we’ll discuss what makes good quality atoms.

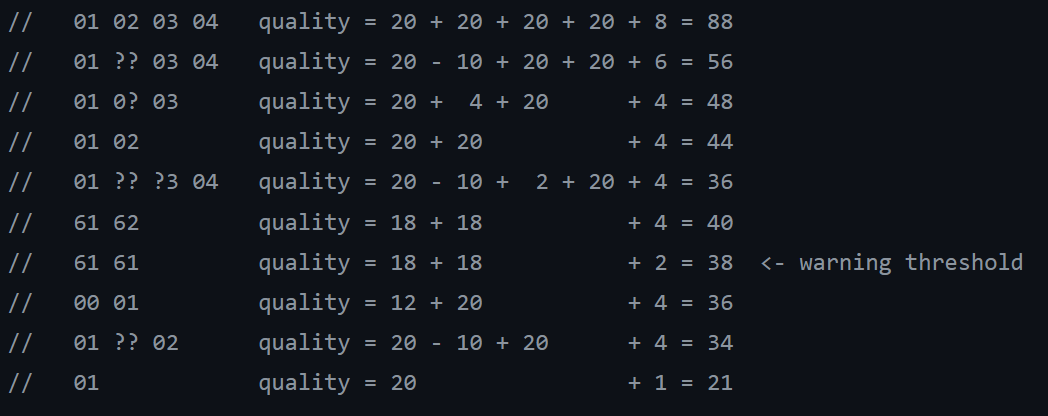

Day 36 - YARA Atoms - Scoring atoms

The YARA code helpfully describes the heuristics used to score atoms, and provides some examples: https://github.com/VirusTotal/yara/blob/2b631d0ee47650923955398921c1ceccc3e38cb1/libyara/atoms.c#L117

It is worth noting before discussing this that by default the maximum atom length (set in the variable YR_MAX_ATOM_LENGTH) is set to 4, and as such the code discusses examples which are 4 bytes in length.

In ascending order, the scores given to each byte in an atom are as follows:

- Any fully masked byte (i.e.

0x??): -10 points - Any partially masked byte (e.g.

0x0?,0x?F): 4 points - Common bytes (i.e.

0x00,0x20,0xFF): 12 points - Letters (i.e.

[A-Za-z]): 18 points - All other bytes: 20 points

An extra score is added at the end which consists of twice the number of unique bytes in the atom. There is also a hidden check which heavily penalises atoms if all the bytes are equal and common (e.g. {00 00 00 00} is worth 12 + 12 + 12 + 12 + 2 = 50, but then has 10 * atom->length = 40 subtracted, meaning it’s only worth 10 points overal).

Example scores can be seen in the docs:

A warning threshold is set to 38 points (a set value found here, which is used to compare against atom qualities here). Any strings with the “best” atom falling below this threshold will yield a warning from YARA, which will look like: string "$" may slow down scanning

Day 37 - YARA Atoms - Writing rules using strings with good atoms

So what’s the takeaway from this discussion of atoms? You want good atoms in all your strings, and this will be focused around substrings that are of maximum length 4 and scored according to the rules specified in the previous post.

So to ensure you’re writing efficient rules, make sure to:

- Avoid strings with very short substrings (e.g.

{AB ?? ?? ?? CD}will only be able to useAB ?? ?? ??or?? ?? ?? CDas an atom, which are below the threshold) - Avoid relying on short substrings of only common bytes (e.g.

{00 20}is below threshold) - Avoid too many partial masked bytes (e.g.

{A? ?B C? ?D}is below threshold)

Instead, try to use strings that you know have good length atoms, and that have unique bytes.

It’s okay in some instances to have strings with bad atoms in them, provided that you have good atoms to make up for it. E.g. {00 20} is a bad string on its own, but {AB CD EF 00 20} is a good string, as YARA can use the atom AB CD EF 00.

YARA will still work even if you have bad atom strings, but don’t ignore those string "$" may slow down scanning warnings! Hunt down the rules which are causing issues, and see if you can fix those strings - it’ll help you out in the long run.

Day 38 - Math Module - Intro

So far we’ve discussed a few different types of YARA modules, mostly around file parsing. However, there are several modules that can be used on any file type, the math module being one of them.

It is described as such in the docs:

The Math module allows you to calculate certain values from portions of your file and create signatures based on those results.

Personally I’ve found it trickier to write rules using these functions, as it may be quite circumstantial how you want to use them. Regardless, let’s see what we can do with some of the available functions.

To start with, I’d recommend reading Wesley Shields’ (@wxs) intro guide to the math module which gives some great examples and explanations: https://gist.github.com/wxsBSD/019740e83faa7a7206f4

Day 39 - Math Module - Entropy

The function that I’ve seen used (and which I’ve used) the most from the math module, is math.entropy(). This returns the entropy of the input, which is described as “information density of the contents of the file”. The higher number returned out (which goes up to a maximum of 8), this means the more dense it is with information, i.e. it is closer to being “random”.

As such, a high entropy can indicate “random” data, which in some scenarios could indicate that the data itself is encrypted (i.e. good encryption algorithms will make the ciphertext look as random as possible).